I like to think about AI assistants using two models: an assistant is either a Romi or a Tachi. Let me explain.

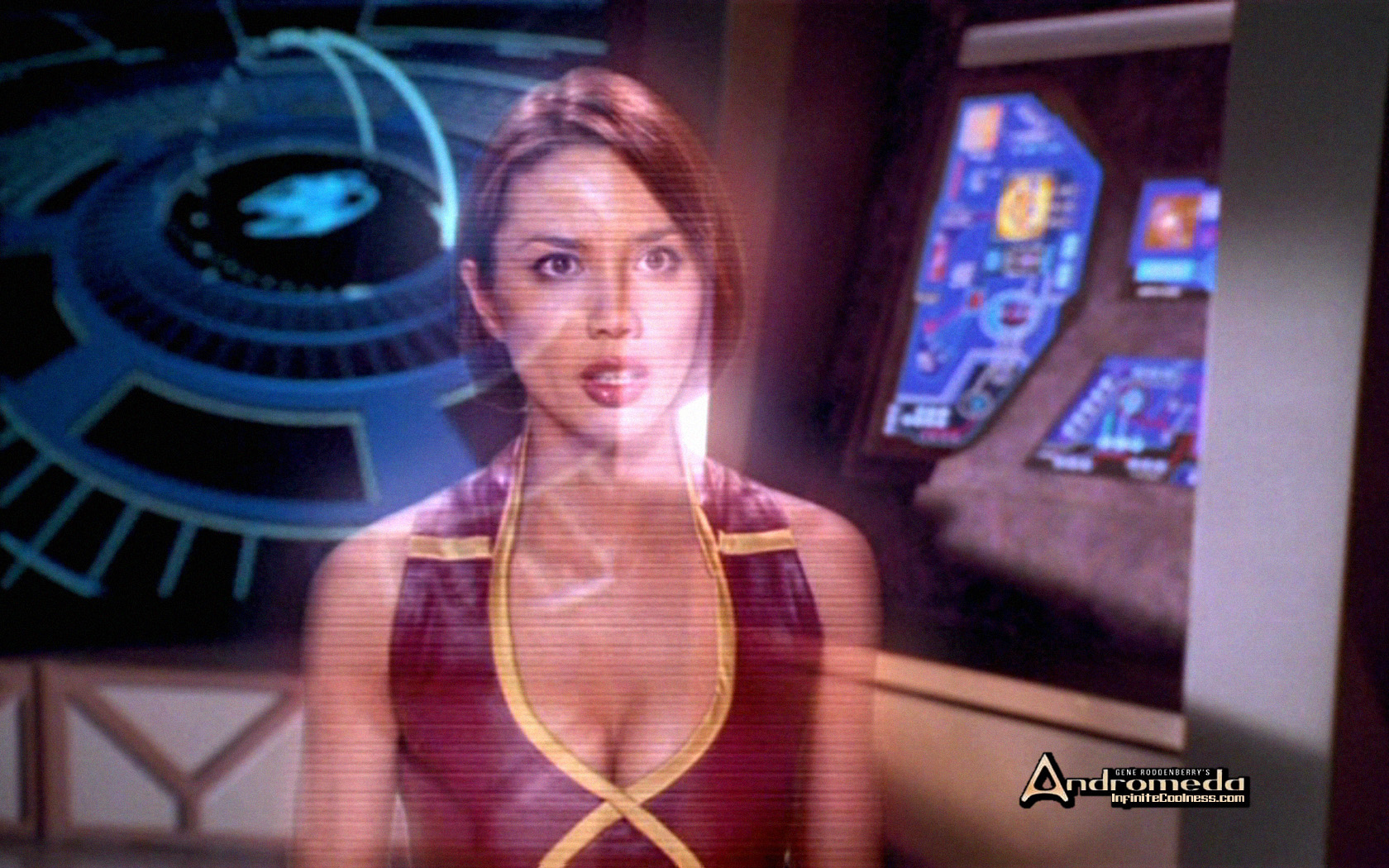

Romi

Andromeda (from the sci-fi TV show of the same name, nicknamed “Romi”) is a sentient AI that embodies the warship Andromeda Ascendant. She isn’t an AI controlling the ship; she is the ship. She converses with the crew, takes orders (as an officer of the High Guard), and fills roles not presently filled by humans. For all intents and purposes, she is a member of the crew herself, and her role is to be a warship.

Romi is an example of an anthropomorphic, “delegate” AI agent. She receives orders and carries them out, while simulating the communication style humans are used to among themselves. When the captain asks for a damage report, she responds as any other officer would, by barking out which sections of the ship are damaged.

Her interface is the same as it would be with a human. This is the model that Siri and Alexa fit into: you ask them to do things, and they do them and talk back to you.

Tachi

The Tachi (or rather, the Rocinante, as it was named after legitimate salvage, but it didn’t rhyme, so) is a state-of-the-art corvette-class gunship of the Martian Congressional Republic Navy, from the book series and TV show The Expanse. The Tachi also has AI helping control the ship, but you never see an anthropomorphic AI agent at any point in the series.

Instead, the AI systems aboard ships like the Tachi understand human intent very well, and shape their more typical touchscreen and holographic user interfaces to accommodate human control. A crewman might say “Show me where all the ships in the vicinity are”, and a map of nearby ships appears on screen. He says “wider”, and the map zooms out to show a longer range. He asks “which of these ships has a manifest heading to Ceres?”, and the UI highlights the relevant ships and shows their ETA to the Ceres asteroid.

At no point on the Tachi does an AI ever “talk” to humans. It understands humans perfectly from natural language, and even from subtler cues (the books often describe how a crewman doesn’t even have to trigger comms to talk to another person; the AI anticipates it and connects them by the time they start speaking). But it seeks to stay out of the way of the humans running things, and to present information in a far denser communication medium than speech: visuals, via dynamic user interfaces.
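
To make the pattern concrete, here is a minimal sketch of a Tachi-style loop: a spoken request goes in, and the only thing that comes out is a new UI state, never a reply. Everything in it is hypothetical and for illustration only: the `MapView` fields, the `SHIPS` data, and the keyword matching in `handle_utterance`, which merely stands in for whatever intent model a real assistant would use.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class MapView:
    """Hypothetical UI state for a tactical map."""
    visible: bool = False
    range_km: int = 1_000
    highlighted: frozenset = frozenset()


# Hypothetical data: ship name -> destination listed on its manifest.
SHIPS = {"ship-a": "Ceres", "ship-b": "Saturn", "ship-c": "Ceres"}


def handle_utterance(view: MapView, utterance: str) -> MapView:
    """Turn a spoken request into a new UI state.

    Keyword matching is a placeholder for a real intent model.
    Note the return type: the assistant never produces speech.
    """
    text = utterance.lower().strip()
    if "show me" in text and "ships" in text:
        return replace(view, visible=True)
    if text == "wider":
        return replace(view, range_km=view.range_km * 2)
    if "heading to" in text:
        destination = text.split("heading to", 1)[1].strip(" ?.")
        matches = frozenset(
            name for name, dest in SHIPS.items() if dest.lower() == destination
        )
        return replace(view, highlighted=matches)
    return view  # Unrecognized request: leave the UI unchanged.


view = MapView()
for line in (
    "Show me where all the ships in the vicinity are",
    "wider",
    "which of these ships has a manifest heading to Ceres?",
):
    view = handle_utterance(view, line)
```

The design choice worth noticing is that `handle_utterance` returns a `MapView` rather than a string. A Romi-style assistant would return something to say; a Tachi-style one only changes what is on screen.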

Tachi is probably the better goal today

I think the Tachi model is the better model for most people building AI assistants today. The reason is that the Romi model has a harsh uncanny valley problem, and for a lot of tasks most people don’t actually want to interact with a human at all, real or simulated. As AI advances, perhaps we cross a chasm where people would actually feel comfortable talking to a Romi, but the bar is super high, and in my opinion even GPT-4 doesn’t meet it yet.

I think that if you aim for a Romi in the near term, you’re more likely to end up with something like the “virtual intelligences” from Mass Effect, which anyone who’s played the games knows are super annoying. They are basically personified versions of the “press 1 for English” IVR phone systems.

The Tachi model, when executed well, maximizes understanding human intent and executing human wishes, while delivering information and getting out of people’s way as efficiently as possible. Put differently, the Romi model wants to be a character in your show, while the Tachi model doesn’t seek to take any airtime at all; it has no lines.